[CS229] Lecture 5 Notes - Descriminative Learning v.s. Generative Learning Algorithm

-

date_range Feb. 18, 2019 - Monday infosortNoteslabelmachine learningcs229

Keep Updating:

- 2019-02-18 Merge to Lecture #5 Note

- 2019-01-23 Add Part 2, Gausian discriminant analysis

- 2019-01-22 Add Part 1, A Review of Generative Learning Algorithms.

- 1. Basics of Generative Learning Algorithms

- 2. Gaussian Discriminant Analysis (GDA)

- 3. Naive Bayes

- Reference

1. Basics of Generative Learning Algorithms

(01/22/2019)

1.1. An Example to Explain the Initiative Ideas:

Consider a classification problem in which we want to learn to distinguish between cats \((y=0)\) and dogs \((y=1)\):

Approach I:

Based on some features of an animal, given a training set, an algorithm like logistic regression or perceptron algorithm, tries to find a decision boundary (e.g. a straight line) to separate the cats and the dogs.

To classify a new animal:

Just check on which side of the decision boundary it falls.

Approach II:

First, look at cats, build a model of what cats look like. Then, look at dogs, build another model of what dogs look like.

To classify a new animal:

Match the new animal against the cat model and the dog model respectively, to see whether the new animal looks more like the cats or more like the dogs we had seen in the training set.

1.2. Definitions:

Discriminative learning algorithms:

Algorithms that try to learn \(p(y \vert x)\) directly (such as logistic regression) or algorithms that try to learn mappings directly from the space of input \(\chi\) to the labels \({0,1}\) (such as perceptron algorithm) are called discriminative learning algorithms.

Generative learning algorithms:

Algorithms that instead try to model \(p(x \vert y)\) and \(p(y)\) are called generative learning algorithms.

1.3. More about Generative Learning Algorithms:

Continue with the example of cats and dogs, if \(y\) indicates whether an sample is a cat (0) or a dog (1), then

- \(p(x \vert y=0)\) models the distribution of cats’ features.

- \(p(x \vert y=1)\) models the distribution of dogs’ features.

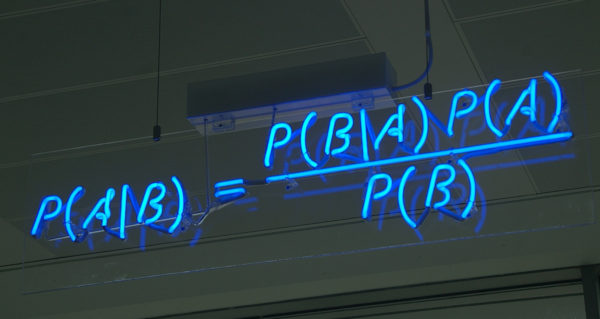

After modeling \(p(y)\) (called the class priors) and \(p(x \vert y)\), our algorithm can then use Bayes Rule to derive the posterior distribution on \(y\) given \(x\):

Here, the denominator is given by \(p(x)=\sum_i p(x \vert y_i)p(y_i)\). In cats & dogs example, \(p(x)=p(x \vert y=0)p(y=0)+p(x \vert y=1)p(y=1)\).

Actually, if we we’re calculating \(p(y \vert x)\) in order to make a prediction, then we don’t need to calculate the denominator, since

2. Gaussian Discriminant Analysis (GDA)

(01/23/2019)

In this model (GDA), we assume that \(p(x \vert y)\) is distributed according to a multivariate normal distribution. First let’s talk briefly about the properties of multivariate normal distributions.

2.1. The multivariate normal distribution

The Definition of so-called multivariate normal distribution:

- A distribution with a mean vector \(\mu \in \mathbb{R}^n\) and a covariance matrix \(\Sigma \in \mathbb{R}^{n \times n}\), where \(\Sigma \geq 0\) is symmetric and positive semi-definite. Also written “\(\mathcal{N}(\mu, \Sigma)\)”, it’s density is given by:

where “\(\vert\Sigma\vert\)” denotes the determinant of the matrix \(\Sigma\).

- For a random variable \(X\) distributed \(\mathcal{N}(\mu, \Sigma)\), the mean is given by \(\mu\):

- The covariance of a vector-valued random variable \(Z\) is defined as \(\text{Cov}(Z) = \text{E}[(Z-\text{E}[Z])(Z-\text{E}[Z])^T]\), which can also be written as \(\text{Cov}(Z) = \text{E}[ZZ^T]-(\text{E}[Z])(\text{E}[Z])^T\). If \(X \sim \mathcal{N}(\mu, \Sigma)\), then:

2.2. The Gaussian Discriminant Analysis model

(02/18/2019)

When $x$ are continuous-valued variables, we can use the so-called Gaussian Discriminant Analysis (GDA) which using multivariate normal distribution to model the different cases:

which can be written as:

Now the parameters are: $\phi, \mu_0, \mu_1, \Sigma$. Apply maximum likelihood estimate, the log-likelihood would be:

(TO DO: Add the derivation)

We get the maximum likelihood estimate of these parameters:

Predict:

Simply apply the rules mentioned above, with these estimates:

2.3. GDA and logistic regression

With the predicting rules above, we have:

where $\theta$ is some appropriate function of $\phi,\Sigma,\mu_0,\mu_1$. This is exactly the form that logistic regression, a discriminative algorithm, to model $p(y=1 \vert x)$.

Assumption relations:

Which is better?

- When $p(x \vert y)$ is really Gaussian, GDA is asymptotically efficient!

- By making weaker assumptions logistic regression is more robust, especially the data is indeed non-Gaussian, then in the limit of large datasets, logistic regression will almost always do better than GDA. For this reason, in practice logistic regression is used more often than GDA.

3. Naive Bayes

(02/18/2019)

As GDA is for continuous $x$ problems, here Naive Bayes is for those problems with discrete $x$.

Here’s the typical example: spam filter.

First, we present the email(text) via a feature vector whose length is the size of word dictionary (a fixed number). If the email contains the $j$-th word, $x_j=1$; otherwise $x_j=0$. The vector would like like this:

If our vocabulary size is 50000, then $x \in \{ 0, 1 \}^{50000}$ ($x$ is a 50000-dimensional vector of 0’s and 1’s). If we were to model $x$ discriminatively/explicitly with multinomial distribution over the $2^{50000}$ possible outcomes, the we’d end up with a ($2^{50000} -1$)-dimensional parameter vector, which is not appliable.

Naive Bayes (NB) assumption:

- To model $p(x \vert y)$, we have to assume that the $x_i$’s are conditionally independent given $y$.

- Under such assumption, the resulting algorithm is called the Naive Bayes classifier.

Then, we have:

Our model now is parameterized by:

The likelihood of the data:

Then we can get the maximum likelihood estimates:

In the equation above, the “$\wedge$” symbol means “and”. The estimates have a very natural interpretation. For instance, $\phi_{j\vert y=1}$ is just the fraction of spam $(y=1)$ emails in which word $j$ does appear.

Predict:

To make a prediction on a new sample with feature $x$, we then simply calculate:

and pick the class has the higher posterior probability.

Laplace smoothing

Here is a problem:

- If there is a new word ($k$-th in the dictionary) appeared in the email text, which is not included in you training set. Then,

Then,

To avoid this, we introduce Laplace smoothing, which replace the estimate with:

Then the estimates is updated as:

Note: In practice, it usually doesn’t matter much whether we apply Laplace smoothing to $\phi_y$ or not, since we will typically have a fair fraction each of spam and non-spam message, so $\phi_y$ will be a reasonable estimate of $p(y=1)$ and will be quite far from 0 anyway. WHY?

Reference

KF